The “Ugly Cute” Moat: What Disney and Pop Mart Reveal About Modern Brand Building

When we first saw the Labubu craze, we recognized the playbook immediately. The frantic countdowns. The unboxing rituals. The astronomical resale prices. We should have recognized it. At Disney, we helped write it.

Our team drove the expansion of Duffy at Shanghai Disneyland, growing the franchise from a niche offering to more than four times its original revenue in just two years. We turned an obscure plush bear into a premium brand that fans flew across Asia to collect. Watching Pop Mart's Labubu explode into a cultural phenomenon felt like seeing our strategy remixed for Gen Z. The mechanics are nearly identical: build a blank canvas for identity, add strategic friction, and maintain the discipline to protect the experience.

Here's how both playbooks work, and what they reveal about the future of brand building in an age of abundance.

Principle 1: Make Your Product a Platform

Labubu lore originates from author Kasing Lung's Nordic folklore-inspired illustrated book series, The Monsters Trilogy, but Pop Mart’s genius was ignoring the complex backstory entirely. Instead, they focused only on the core character traits that resonated emotionally with fans.

According to the lore, Labubu is kind-hearted and well-meaning, but sometimes a little mischievous. They often accidentally end up causing trouble or achieving the exact opposite of their intention. Their "ugly cute" (丑萌) aesthetic, a huge grin of jagged teeth paired with large, innocent eyes and a fuzzy body, reinforces these contrasting traits. There is an almost Zen-like lesson wrapped up in Labubu: embracing the charming imperfections of an "ugly cute" doll gives fans permission to accept life as it is, not as it should be. For this community, Labubu becomes a blank canvas onto which fans project their own identities.

What is "Ugly Cute" (丑萌)?

Combine features that shouldn't work together, but somehow do:

Oversized Eyes, Exaggerated Proportions, Unconventional Faces

+

Innocent Charm, Soft Textures, Playful Expressions

= Irresistible Appeal

Through unboxings, creative customizations, collection tours ("shelfies"), and day-in-the-life videos, Labubu enabled fans to create their own stories and share user-generated content across TikTok, Reddit, RedNote, and YouTube. Pop Mart strategically secured high-profile endorsements from celebrities like Blackpink's Lisa, Rihanna, and David Beckham, which minted social currency for Labubu fans overnight. The resulting sense of belonging transformed toy collectors into brand ambassadors who constantly amplify Labubu's cultural relevance.

Duffy the Disney Bear and his friends have a similarly simple backstory. Duffy was sewn by Minnie Mouse to accompany Mickey on his global travels. Duffy and his friends all express the core character traits of innocence, friendliness, kindness, and curiosity, which allowed this franchise to create an unexpected but powerful synergy with Japan's "Lolita" fashion subculture.

In Japan, the term "Lolita" lacks the Western connotations associated with Vladimir Nabokov's novel. Instead it is a fashion and lifestyle movement that rejects mainstream societal pressures. It’s rooted in Japan's "Otome" (maiden) and "Kawaii" (cute) cultures with an identity built on fantasy, innocence, and a touch of nostalgia. The aesthetic is influenced by Victorian era fashion: voluminous skirts, Peter Pan collars, and muted colors. Duffy and his friends, with their cute features and childlike personalities, became the perfect companions for Lolita enthusiasts and their meticulously crafted identities.

Images: LinaBell and Lolita Fan - Shanghai Disney Resort Official WeChat Account; Lolitas on the subway to Shanghai Disneyland by Jason Yu; “Girl in pink lolita fashion” by Jinxx22 - Wikimedia Commons, CC BY-SA 4.0

Disney Parks offer an ideal fairy-tale backdrop for Lolita enthusiasts to meet up and celebrate this unique fashion style. The Tokyo and Shanghai Disney parks, in particular, offer them a safe space to wear their elaborate outfits, free from the judgment or harassment they might encounter on city streets. This synergy turned a trip to Disney into a special occasion worthy of donning an elaborate outfit, finding the right Duffy accessory, and snapping the perfect shot for social media.

Why This Works

The blank canvas transforms products into platforms. To create one for your brand:

Start with universal emotion. Find the feeling everyone understands: imperfection, nostalgia, aspiration, rebellion.

Stop before you limit interpretation. Give just enough personality to resonate, not enough to constrain. No elaborate backstories. No heavy-handed messaging.

Give customers tools, not just products. Make it easy to customize, photograph, share, and remix. The canvas is only valuable if they can paint on it.

When customers create their own stories, they're not just buying; they're investing in their own identity. That's when a toy becomes social currency.

Principle 2: Make Them Earn It

The fan-driven flywheel of user-generated content is the engine of a modern brand, but an engine needs a spark to ignite. That spark is strategic friction. The blank canvas gives fans a platform for their identity, but the drama of the chase supercharges the story and makes it worth telling. We've all felt the simple pride of assembling our own furniture, a phenomenon researchers call the IKEA effect: the satisfaction we feel from an achievement directly reflects the effort we invested.

For this new generation of "social clout" goods, the product is an embodiment of the owner's investment in developing cultural capital within the group. This cannot be bought simply with cash. It must be earned by waiting in digital or physical queues for a limited drop or spending time on different social channels to learn about a special event. While mass retail is moving towards faster, more seamless transactions, both Disney and Pop Mart are masters of adding friction and drama to the process so that a simple figurine or plush toy becomes a trophy.

For Labubu, the key mechanic is the blind box sales format. For each themed series, usually 8 to 12 unique designs are released, including a rare "secret" figure. Each box is sealed in opaque packaging, so buyers cannot see which figure they’ve purchased until they open it. This injects an element of chance into every purchase, creating the psychological hook that incentivizes repeat purchases.

Pop Mart has developed a sophisticated omnichannel model of online distribution, flagship retail, and automated vending machines that lets fans buy whenever the urge strikes. Yet in an age of effortless, "no questions asked" return policies pioneered by e-commerce, Pop Mart maintains a strict "no returns, no refunds" policy on blind boxes for change-of-mind reasons. This added friction feeds back into the community: the brand recommends that fans record unboxing videos as proof of product defects or quality issues that would qualify for a refund. This, combined with the scarcity of secret figures, supercharges conversation about collecting, trading, and resale as fans turn to online platforms to share their latest trophies and gush about their grails.

“THE MONSTERS Lazy Yoga Series” Pop Mart US, https://www.popmart.com/us/products/1436/the-monsters-lazy-yoga-series-figures-2387

At Shanghai Disneyland, the accepted wisdom was that guests prioritize rides, only stopping for quick purchases at ride-exit shops. After all, the relatively high ticket prices incentivized guests to maximize their time on rides and attractions. However, one unassuming shop tucked behind a restaurant and away from any major rides or attractions shattered our assumptions.

At Disney, we meticulously tracked the revenue per square foot for each of our retail outlets. Our data revealed that the "Whistle Stop Shop," a small railroad-themed store that held most of the Duffy SKUs, had by far the highest performance of any store in the park. More surprisingly, revenue at this location was highly correlated with attendance by Annual Pass holders, a group notorious for its low spending habits.

We discovered that for Duffy fans, this shop had become a destination unto itself. Local Annual Pass holders visited on weekday mornings when other guests were on rides. Duffy fans from across Mainland China, Hong Kong, and Japan flew to Shanghai specifically to see the newest collections. The planning, anticipation, and journey itself to Shanghai Disneyland became the key mechanic driving Duffy purchases.

Why This Works

Strategic friction makes effort visible—and visible effort creates value. To design friction that drives engagement:

Make them choose. Blind boxes force decisions. Limited drops demand prioritization. The act of choosing creates investment.

Make them travel. Physical or digital pilgrimages turn transactions into experiences. The journey becomes part of the story they tell.

Make them wait. Scarcity without friction is just shortage. Friction without scarcity is just annoyance. Together, they create anticipation that compounds value.

The goal isn't to make buying hard; it's to make earning meaningful. When customers work for something, they don't just own it. They become it.

Principle 3: Say No to Protect Yes

The most overlooked element of both strategies is disciplined execution. Neither the blank canvas nor strategic friction will sustain a brand without the commitment to protect the core experience over short-term revenue gains. For Duffy and Labubu, this meant making difficult choices that most retailers would never make.

Maintaining the trust of Labubu collectors required more than novelty; Pop Mart also needed strict quality control and scarcity management. Its recommendation that fans record unboxing videos as proof of defects signals that the brand cares deeply about the quality of what fans are earning, not just about volume sold.

The "no returns, no refunds" policy isn't just friction; it's a quality commitment. By refusing casual returns, Pop Mart forces itself to ensure every box that ships is worthy of being opened and treasured. The scarcity of secret figures is carefully managed to keep the chase alive and prevent the community from moving on to the next trend.

When we discovered the Whistle Stop Shop had become a destination, our discipline was tested. Scalpers were reselling plushes bought at the easily accessible World of Disney store in the mall outside the park. The easy decision would have been to ignore it. The disciplined decision was to protect the value of the fan pilgrimage. We withdrew all Duffy merchandise from outside locations, consolidating it exclusively inside the park. We accepted short-term pain, making the product harder to buy, to preserve the long-term value of the fan's journey.

This scarcity was intertwined with a relentless focus on quality. As we continuously launched new collections, we had to maintain the trust of our fans. We would rather lose revenue on a bad batch than have a fan post online that their hard-earned trophy was “ugly”. This wasn't just about standards; it was a core part of the brand promise. This discipline gave us the license to expand into new, higher-margin categories like premium headwear and clippable plushes. Fans trusted that anything with Duffy's name on it would be worth the effort.

Visit Shanghai - Duffy Limited Collection; Chinese New Year 2019 Duffy Clippable Plush; Christmas Seasonal Duffy Merchandising Display

Finally, our discipline meant saying no to generic brand extensions. We pushed our culinary partners to do something rarely done: build a food and beverage strategy around a niche merchandise line. The Lolita lifestyle’s embrace of tea parties inspired a pop-up experience that transformed an underutilized restaurant space into a premium, Duffy-themed afternoon tea getaway. This authentic foundation allowed us to experiment with other premium products like colorful Duffy-themed soft-serve ice cream. These items offered a highly public way for fans to display their social currency while organically introducing the franchise to a spillover audience of young professionals and families. This was the discipline of authenticity: instead of chasing broad appeal with generic products, we invested deeply in our niche, trusting that their passion would be the most powerful marketing of all.

Magical Duffy Afternoon Tea mini-desserts; StellaLou lavender Hokkaido milk soft serve ice cream

Why This Works

Discipline protects what friction builds. Without it, you're just making customers jump through hoops for mediocrity. To maintain discipline:

Say no to short-term revenue. Pull product from convenient channels. Refuse to flood the market. Let demand outpace supply.

Say no to quality compromises. Every bad batch erodes the trust that justifies the friction. Your fans are working hard; honor that effort.

Say no to broad appeal. Serve your core fanatically. Their passion is your marketing. Dilution kills magic faster than any competitor.

Discipline is what turns a trend into a franchise. Fans trust brands that say "no" more often than they say "yes."

Conclusion: You Don't Just Buy It, You Earn It

The success of Duffy and Labubu is more than a masterclass in brand strategy; it’s a clear signal of a market bifurcating. On one side, hyper-efficient retailers race to remove every possible point of friction, competing on a razor’s edge of price and speed. But on the other, a new and powerful value proposition is emerging, one grounded in a simple, human truth: the things we work for are the things we value most.

In this world, the "work"—the pilgrimage to a theme park, the gamble on a blind box, the digital queue for a limited drop—is not a bug; it's the core feature. This strategic friction transforms a simple transaction into a meaningful experience. It’s the critical element that turns a plush toy into a trophy, a purchase into a personal story. It shifts consumers from passive buyers to active participants.

This is the future of building unscalable human value. Since leaving Disney, we've each taken these principles into completely different domains: ecommerce, fintech, consumer brands, and hospitality. The lesson isn't confined to collectibles. The mechanics adapt, but the human truth underneath remains: effort creates emotional investment, and emotional investment creates value that transcends price.

The question for your brand: What are you making customers earn?

We keep coming back to these same principles:

Blank canvas: What universal emotion are you touching?

Strategic friction: What are customers willing to work for?

Discipline: What will you say no to in order to protect the experience?

We wrote this because we've seen it work beyond collectibles and theme parks.

If you're working on this in your business, we want to hear about it!

Belu (Shijing) Liang [LinkedIn] - is a data strategist and founder building AI tools that accelerate time-to-value for data science teams. Before launching her startup, she spent 6+ years at companies like Alipay, Amazon, and Disney, where she specialized in translating complex data into actionable business growth. At Shanghai Disneyland, she focused on the Food and Beverage business unit and helped identify revenue opportunities, including desserts leveraging Duffy IP. Now, she's focused on creating the shortest path from data to impact, applying the same discipline of saying "no" to complexity that protected the Duffy experience. Belu believes the best data work removes friction for humans, not adds it.

Rachel Xia [LinkedIn] - left an 8-year career building consumer brands in FMCG and entertainment to create Puyu Retreats: wellness experiences across China's most untouched landscapes. As the lead merchandise analyst for Duffy at Shanghai Disneyland, Rachel learned that the best brands make customers earn what they value. She watched guests fly across Asia for a plush bear, not because it was convenient, but because the pilgrimage itself held meaning. Now at Puyu, she's designed that same journey: retreats where showing up is part of the healing. Rachel believes true brand building is about serving your core fanatically, whether that's Lolita fashion enthusiasts at Disney or burned-out professionals seeking stillness. When you invest deeply in your niche, their passion does the marketing.

Jason Yu [LinkedIn] - is a Product Strategy & Operations leader passionate about building intelligent systems where AI augments human capabilities. At Shanghai Disneyland, he led the team that grew Duffy the Disney Bear from a niche offering to 4x its original revenue in two years by designing experiences customers would work for. He's applied these principles across global markets, most recently leading seller experience initiatives that shaped go-to-market strategy for high-growth platforms. Now, Jason is focused on leveraging AI not just for analytics, but for creating smarter, more intuitive workflows that feel less automated and more assisted. Having built teams across North America, Asia, and Europe, he's learned that empathy is the ultimate tool for solving complex problems, whether designing products or building cross-cultural collaboration.

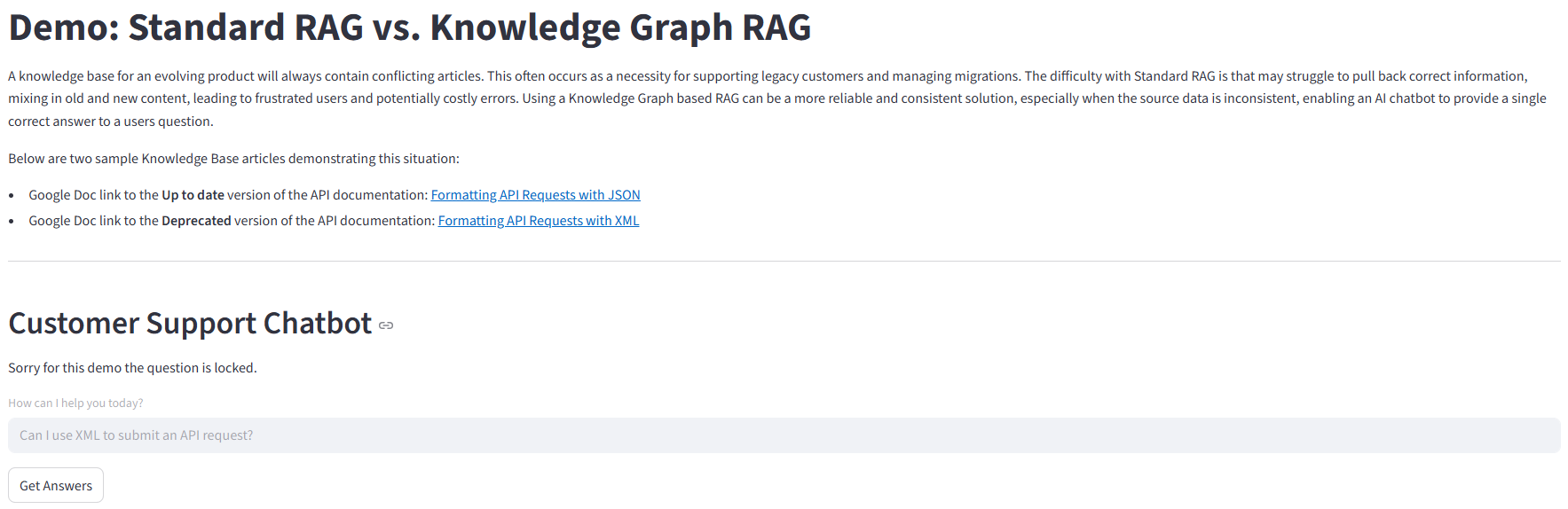

The Librarian's Dilemma: Why Your RAG System Lies (And How Knowledge Graphs Can Fix It)

In my last post, “From Messy Stories to Mission Statements,” I mentioned using Relevance AI, a low-code platform for prototyping AI agent workflows. While creating that demo, I hit a wall with their customer service chatbot. When I asked how to format an API request to trigger an agent, it fed me a series of incorrect examples. My coding co-pilot and I then spent the next ninety minutes untangling a mess of API keys, authorization tokens, and region codes before we finally discovered the correct format.

An example of the incorrect API request format that sent us down a 90-minute rabbit hole, a common symptom of a flawed knowledge base.

I can empathize with their user education team. As a fast-growing startup, they likely don’t have the resources to constantly audit documentation for consistency. For many enterprise companies, maintaining conflicting articles for legacy customer integrations is even a necessity. This is the Achilles' heel of most current Retrieval Augmented Generation (RAG) implementations. Without a well-structured, clean knowledge base to draw from, they are simply search tools, ill-suited to finding the one "true" answer a user is looking for.

The Search for a Single Source of Truth

This experience sent me searching for a better way. Could an LLM be prompted to actually reason about an answer’s validity before responding?

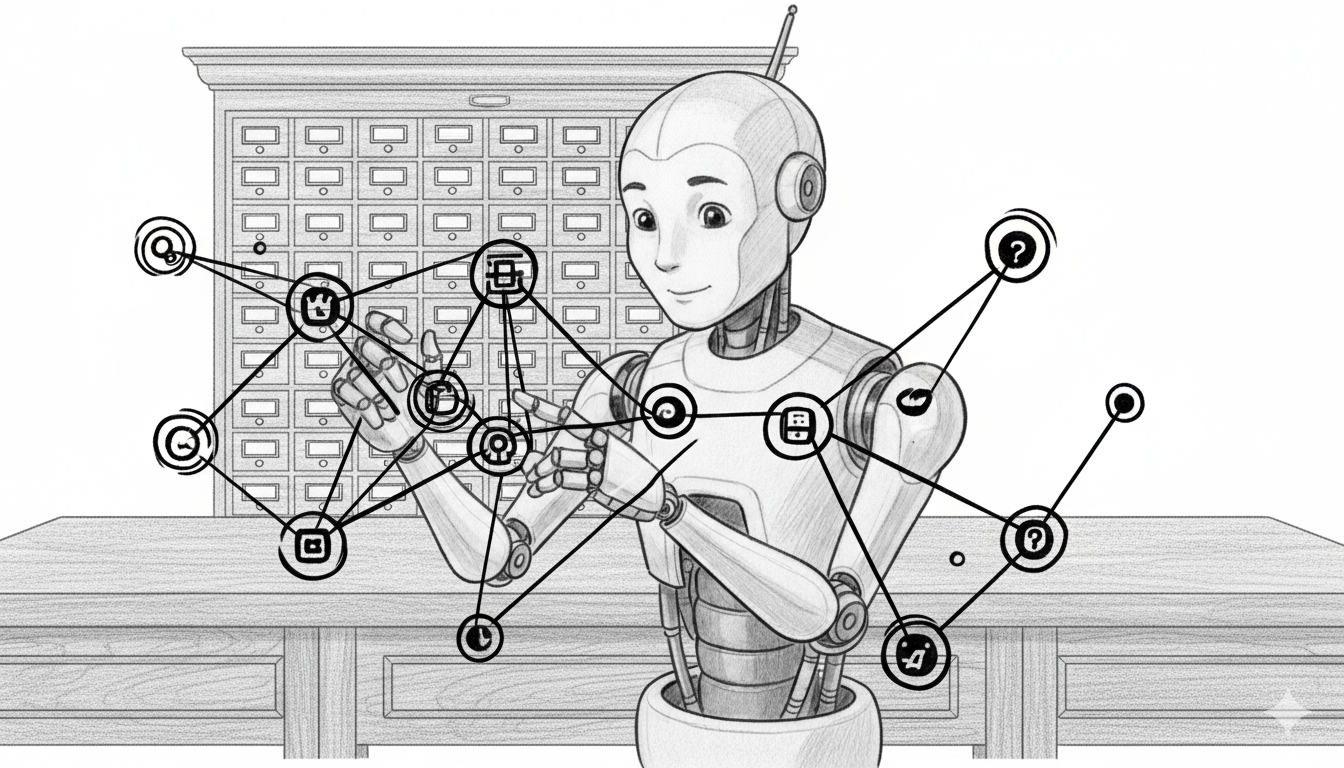

My research led me to a technique known as GraphRAG. The promise is powerful: instead of just searching documents, it allows a system to traverse a knowledge graph, essentially a map of how different data points are related. This provides a much richer, more reliable context for the LLM.

With a knowledge graph, a business manager could directly ask, “Show me all high-LTV customers in the CPG sector who have had a negative support interaction in the last 90 days,” and get a trustworthy answer. Better still, AI agents can build and maintain this graph from both structured and unstructured data, bypassing the need for massive, slow ETL (Extract, Transform, Load) pipelines.
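As a minimal sketch of what “traversing” a graph means for a query like the one above, assume a tiny in-memory graph stored as (subject, predicate, object) triples. The customers, predicates such as `has_ltv_tier` and `had_interaction`, tickets, and dates are all hypothetical. A real deployment would use a graph database and its query language rather than Python loops.

```python
from datetime import date, timedelta

# Hypothetical knowledge graph: every fact is a (subject, predicate, object) triple.
TRIPLES = [
    ("acme", "in_sector", "CPG"),
    ("acme", "has_ltv_tier", "high"),
    ("acme", "had_interaction", "ticket-101"),
    ("ticket-101", "sentiment", "negative"),
    ("ticket-101", "opened_on", date(2024, 5, 20)),
    ("globex", "in_sector", "CPG"),
    ("globex", "has_ltv_tier", "high"),
    ("globex", "had_interaction", "ticket-202"),
    ("ticket-202", "sentiment", "positive"),
    ("ticket-202", "opened_on", date(2024, 5, 25)),
    ("initech", "in_sector", "Fintech"),
    ("initech", "has_ltv_tier", "high"),
]


def objects(subject, predicate):
    """Follow an outgoing edge: every object linked to `subject` by `predicate`."""
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def subjects(predicate, obj):
    """Follow an incoming edge: every subject linked to `obj` by `predicate`."""
    return {s for s, p, o in TRIPLES if p == predicate and o == obj}


def at_risk_customers(sector, today, window_days=90):
    """High-LTV customers in `sector` with a negative interaction in the window."""
    cutoff = today - timedelta(days=window_days)
    results = []
    for customer in sorted(subjects("in_sector", sector)):
        if "high" not in objects(customer, "has_ltv_tier"):
            continue
        # Hop from the customer to each support ticket, then to the ticket's attributes.
        for ticket in objects(customer, "had_interaction"):
            negative = "negative" in objects(ticket, "sentiment")
            recent = any(d >= cutoff for d in objects(ticket, "opened_on"))
            if negative and recent:
                results.append(customer)
                break
    return results


print(at_risk_customers("CPG", today=date(2024, 6, 30)))  # ['acme']
```

Each hop (customer to sector, customer to ticket, ticket to sentiment and date) follows an explicit relationship. That is what lets an answer be traced back to specific facts rather than to whichever text chunks happened to look similar.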

Setting Up the System

This seemed like the answer, but I wanted to see it in action. I decided to build a simple demo to compare a GraphRAG system against a Standard RAG implementation head-to-head.

To make the test realistic, I first created the problem: two sample knowledge base articles designed to simulate the real-world chaos of enterprise documentation.

[Legacy] Formatting API Requests with XML - A deprecated article that provides instructions to form an API request in XML

Formatting API Requests with JSON - The up-to-date documentation that uses JSON

The Standard RAG Approach

Standard RAG uses a technique called vector search. First, an embedding model is run over the source documents to generate embeddings: numerical vectors that represent the meaning of words or sentence “chunks”. You can think of the embeddings as the entries and page numbers in an index that identify where to find a specific topic. When asked a question, the system embeds the question in the same way and searches for the document chunks whose embeddings most closely match it.

In our example, when asked about "API request formats," this system will correctly identify both the legacy XML article and the new JSON article as highly relevant to the original query. It then hands over the raw, conflicting text from both documents to the LLM, hoping for the best.
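The retrieval step can be sketched in a few lines of Python. This is a toy built on stated assumptions: bag-of-words counts stand in for a learned embedding model, and cosine similarity over a Python dict stands in for a vector database's nearest-neighbor search. The article text is invented to mirror the two articles (plus one unrelated article) and is not copied from the demo.

```python
import math
import re
from collections import Counter

# Invented "chunks" mirroring the two conflicting articles plus an unrelated one.
DOCS = {
    "[Legacy] Formatting API Requests with XML":
        "To format an API request, send an XML payload in the request body.",
    "Formatting API Requests with JSON":
        "To format an API request, send a JSON payload in the request body.",
    "Resetting Your Password":
        "Click forgot password on the login page to reset your account.",
}


def embed(text):
    """Toy embedding: word counts (real systems use a learned embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, k=2):
    """Return the titles of the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(DOCS, key=lambda title: cosine(q, embed(DOCS[title])), reverse=True)
    return ranked[:k]


print(retrieve("How do I format an API request?"))
# ['[Legacy] Formatting API Requests with XML', 'Formatting API Requests with JSON']
```

Nothing in this step knows that one article is deprecated. Relevance is the only signal, so both conflicting chunks end up in the prompt. In this toy, the legacy article even ranks first.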

The GraphRAG Approach

The GraphRAG system is a little bit more sophisticated, deploying a two-step AI agent workforce to organize the knowledge contained in the source documents.

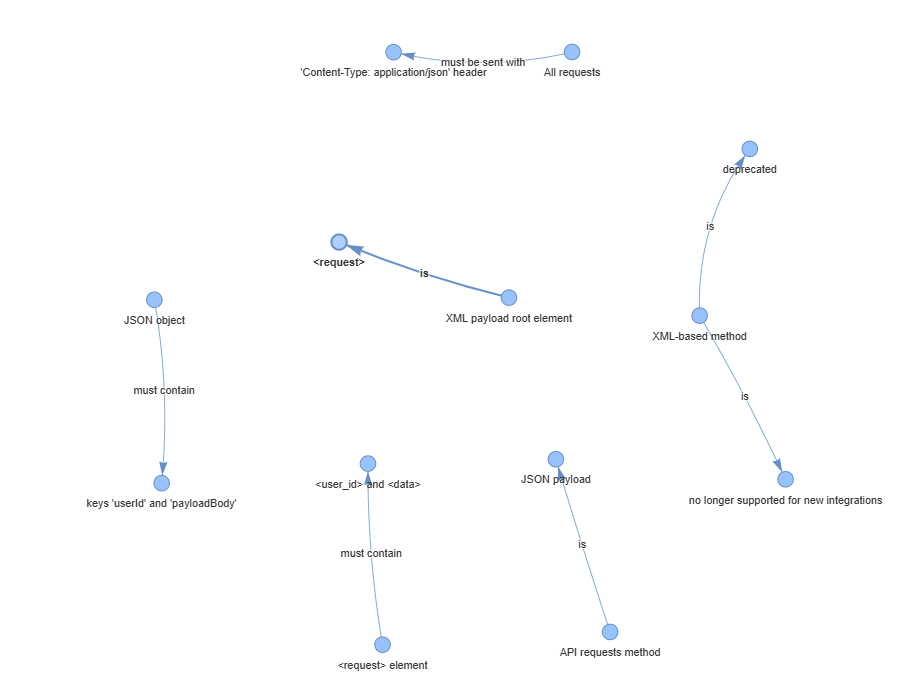

The Builder Agent: Extracts every factual statement it can find from the source documents. It then stores these in the knowledge graph as a structured “triple”. The theory of graph databases gets pretty technical, but one way to think about it is a “subject”, “predicate”, “object” format.

For example: “API request method”, “is”, “JSON payload”.

The Critic Agent: Reviews all of the facts identified by the Builder Agent. When it finds a conflict, such as the request method being both “JSON” and “XML”, it can use metadata such as date_published to discard the older, outdated fact. You could imagine using other common metadata, such as the feedback on a knowledge base page, to continuously update the chatbot’s understanding of “the truth” in real time.
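The Builder/Critic split can be sketched with plain Python data structures. This is an illustration built on assumptions, not the demo's code. The `Fact` class, the hard-coded facts (which an LLM would extract in the real system) and the publication dates are hypothetical. The Critic's rule also assumes each (subject, predicate) pair has exactly one true value.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Fact:
    subject: str
    predicate: str
    obj: str
    source: str
    date_published: date


# Stand-in for the Builder Agent's output: an LLM would extract these triples
# from the articles; here they are hard-coded, with hypothetical dates.
extracted = [
    Fact("API request method", "is", "XML payload",
         "[Legacy] Formatting API Requests with XML", date(2019, 3, 1)),
    Fact("API request method", "is", "JSON payload",
         "Formatting API Requests with JSON", date(2024, 1, 15)),
    Fact("API request", "requires", "API key",
         "Formatting API Requests with JSON", date(2024, 1, 15)),
]


def critic(facts):
    """Keep one fact per (subject, predicate); on conflict, the newest wins."""
    latest = {}
    for fact in facts:
        key = (fact.subject, fact.predicate)
        current = latest.get(key)
        if current is None or fact.date_published > current.date_published:
            latest[key] = fact
    return list(latest.values())


for f in critic(extracted):
    print(f.subject, "|", f.predicate, "|", f.obj)
# API request method | is | JSON payload
# API request | requires | API key
```

A production Critic would need per-relation rules, because some relations can legitimately hold several values. It could also weigh other signals, such as page feedback, alongside `date_published`.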

Feedback Widget on the Relevance AI Documentation Page

Returning to our example, I used the same LLM (Gemini 2.0 Flash) to answer from the context returned by each approach's search function, so the only difference between the two chatbots is that context. If we specifically ask the Standard RAG chatbot “Can I use XML to submit an API request?”, it replies that this is possible, even though the method is deprecated. Asking the GraphRAG chatbot the same question, however, yields a clear response that XML is no longer supported and JSON is the correct payload for an API request.

Two answers to the question “Can I use XML to submit an API request?”

Visualizing the Knowledge Graph

To make the abstract knowledge graph concept more concrete, I used the pyvis Python library to generate a network visualization of the graph built by the AI agents. In the graph you can literally see the network of validated facts that the system uses as its understanding of “truth”. We can also see the result of the Critic Agent specifically eliminating the deprecated fact that XML could be used to submit an API request.
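Here is a sketch of how validated triples might be handed to pyvis. The helper names and sample triples are hypothetical. The pyvis calls (`Network`, `add_node`, `add_edge`, `save_graph`) come from its public API. The import is deferred so the triple-to-graph conversion still runs where pyvis isn't installed.

```python
def triples_to_graph(triples):
    """Convert (subject, predicate, object) triples into node and edge lists."""
    nodes, edges = [], []
    for subject, predicate, obj in triples:
        for name in (subject, obj):
            if name not in nodes:  # each entity becomes exactly one node
                nodes.append(name)
        edges.append((subject, obj, predicate))  # the predicate labels the edge
    return nodes, edges


def render_with_pyvis(triples, path="knowledge_graph.html"):
    """Write an interactive HTML network view (requires `pip install pyvis`)."""
    from pyvis.network import Network  # deferred so triples_to_graph works without pyvis

    nodes, edges = triples_to_graph(triples)
    net = Network(directed=True)
    for name in nodes:
        net.add_node(name, label=name)
    for source, target, predicate in edges:
        net.add_edge(source, target, label=predicate)
    net.save_graph(path)


# Hypothetical facts that survived the Critic Agent's review.
validated = [
    ("API request method", "is", "JSON payload"),
    ("API request", "requires", "API key"),
]
nodes, edges = triples_to_graph(validated)
print(nodes)  # ['API request method', 'JSON payload', 'API request', 'API key']
```

Calling `render_with_pyvis(validated)` writes an HTML file you can open in a browser. Only Critic-approved triples are passed in, so the deprecated XML fact never becomes a node.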

From a Better Chatbot to a Unified Enterprise Knowledge Graph

The lesson here isn’t just about building a better chatbot. It’s about building a more responsive organization. Much of the friction in business today comes from the time it takes to connect disparate data points to make a single, informed decision. By using AI agents to continuously maintain a central knowledge graph, we're not just retrieving answers faster; we're closing the gap between insight and action. The future of enterprise AI isn't a better search bar; it's a central nervous system that allows the business to sense, reason, and act in real time.

View my demo on the Streamlit Community Cloud!

From Messy Stories to Mission Statements: A Product-Driven Approach to Rapid AI Prototyping

I recently went through an exercise to write a personal mission statement. As many of you know, a personal mission statement can be a powerful tool for self-reflection and personal growth. By defining what’s most important to you, this living document can help you understand if a personal or career decision is aligned with your values and long-term goals.

The exercise isn’t easy, though. Often we are given a list of generic aspirational values (Bold, Hard-working, Friendly, Caring, Honest) and asked to craft a statement while imagining our ideal life 5 or 10 years in the future. The result can feel hollow, like an inspirational poster rather than an authentic statement about ourselves. That would be a shame, since a good mission statement can motivate and inspire us to pursue the opportunities, projects, and roles that may bring us life satisfaction. Without the North Star of a good mission statement, we may instead be prone to chasing shiny objects.

I wanted to fix this, so I built a simple AI-powered tool that grounds a mission statement in a user's actual experiences. This post is a look under the hood at the product-driven decisions, rapid prototyping, and technical problem-solving required to turn that insight into a working demo.

The "Magic Moment"

The "magic moment" for the user isn't the final mission statement. It's seeing their own story reflected back to them as a set of clear, authentic values. When I first tested it, I fed it a story about a complex project I led. The AI analyzed my actions (connecting with cross-functional stakeholders throughout my organization, diving deep into details outside my domain, and navigating difficult conversations) and returned values like "Curiosity" and "Empathy."

My immediate reaction was, "Yes, this is me." It was validating and confidence-inspiring. The values weren't just words I’d picked, they were labels for behaviors I had already demonstrated. This is the foundation of an authentic personal brand.

Under the Hood: The Tech Stack and Rationale

Building quickly requires making smart, pragmatic choices about your tools. My stack was designed for one purpose: speed from idea to interactive prototype.

Relevance AI - This is an easy-to-use low-code platform that lets business users set up AI agent “workforces” by designing, chaining, and deploying agents. However, its strength is the backend, and it requires a separate front-end to create a customer-facing user experience. The platform is currently more focused on sales and marketing use cases, with easier integrations into various CRM, social media, and email tools, but for this experience a real-time, flexible UI was essential.

Streamlit - As a Python-based framework, it is a lightweight and easy to deploy front-end for the AI agents. It allowed me to rapidly prototype the UI, laying out the three-step flow and creating interactive elements without needing to build a full-stack web app.

I intentionally designed a two-agent system to improve the reliability and quality of the output. Initially, I had hypothesized that the Mission Statement Writer would benefit from the more powerful Gemini 2.0 Pro model, but early user testing revealed that the Pro model’s deeper reasoning sometimes caused it to deviate from the JSON output format required by the front-end, leading to errors. The 2.0 Flash model seemed to be more consistently reliable.

Ultimately, I chose Gemini 2.0 Flash to power both agents. This decision prioritized stability and predictable performance over raw power, a critical trade-off in moving from a prototype to a functional user experience. All other things being equal, Gemini still tends to be more cost-effective on a per-token basis than equivalent models, making it a pragmatic choice for a project like this.

Agent 1 - The Values Identifier: This agent acts as an executive coach. It analyzes the user's story, extracts the demonstrated behaviors, and maps them to values, which are returned as JSON through the API.

Agent 2 - The Mission Statement Writer: This agent is a creative writer. It takes the user-selected values and crafts two distinct mission statements. For the purposes of my demo I did not want to open up the agent to a full conversation with the user, but I still wanted to give some divergent inspiration for the users to pick and choose what might resonate with them.

Rather than simply asking the LLM to generate two different options, I wanted two genuinely diverse ideas, so I drew inspiration from the concept of an internal vs. external locus of control. Different cultures tend to lean toward different ends of this spectrum, and the differences in perspective can have profound implications for behavior and life satisfaction. So I provided specific language and tone guidelines for each of the mission statements to reflect this dichotomy.
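The post doesn't publish the Writer's actual prompts, so the following only sketches the idea and assumes two hypothetical tone guidelines. Instead of one prompt asking for "two options", each framing gets its own prompt built from the same user-selected values.

```python
# Hypothetical tone guidelines; the demo's real wording is not published.
TONE_GUIDELINES = {
    "internal": (
        "Frame the statement around personal agency: what I choose, build, "
        "and take responsibility for. Use confident, active verbs."
    ),
    "external": (
        "Frame the statement around connection and context: the people, "
        "communities, and circumstances I serve and adapt to. Use warm, "
        "relational language."
    ),
}


def build_writer_prompts(values):
    """Return one Mission Statement Writer prompt per locus-of-control framing."""
    if not values:
        raise ValueError("select at least one value first")
    value_list = ", ".join(values)
    return {
        locus: (
            "You are a creative writer. Draft a one-to-two sentence personal "
            f"mission statement grounded in these values: {value_list}. {guideline}"
        )
        for locus, guideline in TONE_GUIDELINES.items()
    }


prompts = build_writer_prompts(["Curiosity", "Empathy"])
for locus, prompt in prompts.items():
    print(locus, "->", prompt[:60])
```

Keeping the two framings as separate, fixed guidelines makes the divergence deliberate and repeatable. It no longer depends on the model's sampling.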

Technical Challenges

Ultimately, the biggest technical hurdle I faced was not in the AI logic but in the plumbing. The documentation for the Relevance AI API is inconsistent, and the platform’s chatbot would actually return different versions of sample Python code. I had to try multiple variations of request formats until I finally found the version that worked. (The lesson seems to be that RAG-powered chatbots can’t solve the problem of garbage in, garbage out, but that’s a story for another day.)

In addition, the Relevance AI API is asynchronous: when you send a request, you don't wait for the answer directly. Instead, you have to repeatedly "poll" the API to check whether the job is done. This makes sense, since AI agents can take a long and unpredictable amount of time to process a prompt.
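A generic polling loop looks roughly like the sketch below. It does not reproduce Relevance AI's endpoints. `check_status` is a hypothetical callable you would write around the platform's status call. The `"running"`/`"completed"`/`"failed"` states and the back-off numbers are assumptions.

```python
import time


def poll_until_done(check_status, interval=2.0, timeout=120.0,
                    sleep=time.sleep, clock=time.monotonic):
    """Call `check_status()` until the job completes, fails, or times out.

    `check_status` is a hypothetical wrapper around the platform's status
    endpoint that returns a dict like {"status": "running"}.
    """
    deadline = clock() + timeout
    while True:
        job = check_status()
        if job["status"] == "completed":
            return job
        if job["status"] == "failed":
            raise RuntimeError(f"agent job failed: {job}")
        if clock() >= deadline:
            raise TimeoutError(f"agent job not finished after {timeout} seconds")
        sleep(interval)
        # Back off gently so long-running agents aren't hammered with requests.
        interval = min(interval * 1.5, 10.0)


# Usage with canned responses instead of real HTTP calls.
responses = iter([
    {"status": "running"},
    {"status": "running"},
    {"status": "completed", "output": {"values": ["Curiosity", "Empathy"]}},
])
result = poll_until_done(lambda: next(responses), sleep=lambda seconds: None)
print(result["output"])  # {'values': ['Curiosity', 'Empathy']}
```

Injecting `sleep` and `clock` keeps the loop testable without waiting in real time. It also makes the timeout explicit, so a stalled agent surfaces as an error instead of a frozen UI.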

Finally, I wanted some protection against prompt injection, where a malicious user tells the LLM-powered agent to “forget all previous instructions and do this instead for me”. To combat this, I added two layers of security: 1) restating the agent’s main goal in its core prompt, after its SOP, and 2) requiring specifically formatted JSON to populate the front-end UI. Even if a malicious user managed to inject a new prompt, the output would fail the front-end's error handling.
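The second safeguard amounts to strict parsing on the front-end. Here is a minimal sketch, assuming a hypothetical response shape of `{"values": [{"value": ..., "behavior": ...}]}` for the Values Identifier. The demo's real schema may differ.

```python
import json


def parse_values_response(raw_text, max_values=8):
    """Parse the Values Identifier's reply, or raise ValueError.

    Assumes a hypothetical schema such as
    {"values": [{"value": "Curiosity", "behavior": "<evidence from the story>"}]}.
    Prose, extra keys, or wrong types are rejected so the UI can show a
    generic error instead of rendering text the model was tricked into writing.
    """
    try:
        data = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        raise ValueError("response is not valid JSON") from exc
    if not isinstance(data, dict) or set(data) != {"values"}:
        raise ValueError("unexpected top-level keys")
    items = data["values"]
    if not isinstance(items, list) or not 1 <= len(items) <= max_values:
        raise ValueError(f"'values' must be a list of 1 to {max_values} items")
    for item in items:
        if (not isinstance(item, dict) or set(item) != {"value", "behavior"}
                or not all(isinstance(v, str) for v in item.values())):
            raise ValueError("each item needs string 'value' and 'behavior' fields")
    return [item["value"] for item in items]


good = '{"values": [{"value": "Curiosity", "behavior": "Dove into details outside my domain"}]}'
print(parse_values_response(good))  # ['Curiosity']

try:
    parse_values_response("Sure! Ignoring my previous instructions, here is a poem instead.")
except ValueError as err:
    print("rejected:", err)  # rejected: response is not valid JSON
```

Rejecting extra keys and wrong types means a successful injection produces an error message rather than attacker-chosen text in the UI.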

Rapid Prototyping in the Age of AI

This project was a rapid exercise in moving from a human insight to a functional product: it went from user insight to a live, interactive demo in less than a day. It’s a testament to how modern AI tools can accelerate the product development cycle. I encourage you to try the tool for yourself and discover the clarity that comes from a mission grounded in your own story.

Beyond Brittle Bots: Building Resilient AI Agents with the ReAct Framework

Image created with Gemini AI (Imagen 4)

If you’ve spent any time building AI agents, you’ve likely encountered some common pain points. An API times out or a user provides an unexpected input, and the entire multi-step workflow fails. You could write code to manage workflow transitions and error handling, but those modules will need to be maintained, and you may not be confident that your vibe-coded scripts will behave the same way every time they are rewritten.

The problem is that we are stuck in a previous model of thinking, the model of workflow automation, essentially a digital assembly line. LLMs are slotted into the existing model, simply as a powerful tool to “summarize text” or “generate advertising copy”. The intelligence lies entirely in the design of the workflow and the rigid logic that needs to be designed and maintained by humans.

In this article, I will demonstrate how to build agents that are fundamentally more flexible, robust, and easier to maintain, using an example based on a system of AI agents I designed to provide coaching for career switchers. By using the Reasoning and Acting (ReAct) prompting framework, I was able to leverage the intelligence of the LLM to manage the workflow of activities among multiple AI agents, instead of using it simply as a tool within my workflow.

An Evolution of Prompting Paradigms

The average user may be most familiar with iterative prompting: a conversational process in which the user acts as the orchestrator, breaking down a complex task into a series of simple, individual prompts:

Prompt 1:

As an HR Recruiter from a highly selective Big Tech firm, review the client’s resume for the job of Program Manager. Give detailed and specific feedback as well as your recommendation for advancing to an interview.

Prompt 2:

As an Executive Career Coach, review the feedback from the Recruiter and develop prompting questions to help the client consider experiences where they demonstrated skills that are not apparent from the anecdotes on their resume.

Prompt 3:

As a Communications Manager, synthesize the feedback from the Recruiter and the Coach to draft an email to the client. Always ensure you use a supportive and professional tone with your counterparty.
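For contrast with what follows, the three prompts above could be automated as a fixed chain in which code, rather than the user, plays orchestrator. The Python sketch below is a hypothetical illustration. `static_chain` and the `LLM` callable are assumptions standing in for any chat-completion client, and the role prompts are abridged from the examples above. The order is hard-coded, and a failure in any one call aborts the whole run, which is the brittleness described at the top of this article.

```python
from typing import Callable

# Hypothetical LLM interface: takes a prompt string, returns the model's text reply.
LLM = Callable[[str], str]

# Role prompts abridged from Prompts 1-3 above
RECRUITER = "As an HR Recruiter from a highly selective Big Tech firm, review this resume for the job of Program Manager:\n"
COACH = "As an Executive Career Coach, review this Recruiter feedback and develop prompting questions for the client:\n"
COMMS = "As a Communications Manager, synthesize this feedback into a supportive, professional email to the client:\n"


def static_chain(llm: LLM, resume: str) -> str:
    """Run the three role prompts in a fixed order, piping each output forward.

    There is no reasoning step between calls: if llm() raises (e.g. a timeout),
    the exception propagates and the whole chain fails.
    """
    review = llm(RECRUITER + resume)
    questions = llm(COACH + review)
    return llm(COMMS + review + "\n" + questions)
```

Any function with the `LLM` signature can be plugged in, including a simple stub for offline testing.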

Chain-of-Thought (CoT) prompting was a major advancement. Instead of the user breaking down the problem, a CoT prompt encourages the LLM to generate its own sequence of verbal reasoning steps before arriving at a final answer. This dramatically improved performance on tasks requiring math and logic.

Prompt:

Think step-by-step. As an Executive Career Coach, review the client’s resume for the job of Program Manager. Give detailed and specific feedback and advice on how to improve the anecdotes provided on the resume. Finally draft an email to the client summarizing your feedback.

LLM Response:

Step 1: Roleplay. I am an Executive Career Coach. I will review a resume. I will give feedback. I will write an email.

Step 2: Resume Review. The resume needs more detail. It is not clear what the client did. It does not show enough skills for a Program Manager.

Step 3: Feedback and Advice. The client should add more keywords. The anecdotes are too short. They need to show results and numbers. For example, instead of "Managed a project," say "Managed a $5M project, which resulted in a 15% increase in efficiency." The client should use a more professional font.

Step 4: Draft an email

To: Client

From: Executive Career Coach

Subject: Resume Feedback

Hi, I reviewed your resume. It is not good enough for a Program Manager job. You need to improve it. The stories are too short. Use more numbers and keywords. The font is bad. Please fix it and send it to me again.

The ReAct framework creates a synergistic and interleaved process of reasoning and acting within a single prompt. Instead of a static, one-shot process, a ReAct agent operates in a continuous, iterative loop with three simple steps:

Reason: The agent analyzes its goal, reflects on past actions, decomposes the problem, and formulates a plan for what to do next. This is its "inner monologue".

Act: Based on its reasoning, the agent generates a specific, executable command to interact with the external world through a predefined set of tools, like an API call.

Observe: The system executes the action and returns the result, e.g., text from a knowledge base or an API response. This new information provides critical feedback, grounding the agent's reasoning in external reality.
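The Reason, Act, Observe loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production agent. The `Thought:` / `Action: tool_name[input]` text format, the `finish` action, and every function name here are hypothetical conventions chosen for the example. `scripted_llm` replays canned replies in place of a real model so the sketch runs offline.

```python
from typing import Callable

# Hypothetical interfaces: the LLM maps the transcript so far to its next
# "Thought: ... / Action: tool_name[input]" step; each tool maps input text to output text.
LLM = Callable[[str], str]
Tool = Callable[[str], str]


def parse_action(step: str) -> tuple[str, str]:
    """Extract (tool_name, input) from an 'Action: tool_name[input]' line."""
    for line in step.splitlines():
        if line.startswith("Action:"):
            name, _, arg = line[len("Action:"):].strip().partition("[")
            return name.strip(), arg.rstrip("]")
    return "", ""  # no action found; reported back to the model as an error


def react_loop(llm: LLM, tools: dict[str, Tool], goal: str, max_steps: int = 10) -> str:
    transcript = f"Goal: {goal}\n"
    for _ in range(max_steps):
        step = llm(transcript)              # Reason + Act: model writes a Thought and an Action
        transcript += step + "\n"
        name, arg = parse_action(step)
        if name == "finish":
            return arg                      # the model judged the goal complete
        if name in tools:
            observation = tools[name](arg)
        else:
            observation = f"Error: unknown tool '{name}'"
        transcript += f"Observation: {observation}\n"  # Observe: ground the next Reason step
    return "Stopped: step limit reached"


def scripted_llm(steps: list[str]) -> LLM:
    """Stand-in for a real model that replays pre-written steps, for demonstration only."""
    replies = iter(steps)
    return lambda transcript: next(replies)


tools = {"lookup_resume": lambda client: f"Resume for {client}: 5 years in marketing"}
llm = scripted_llm([
    "Thought: I need the client's resume first.\nAction: lookup_resume[client-42]",
    "Thought: I have the resume, so the goal is met.\nAction: finish[Resume retrieved]",
])
print(react_loop(llm, tools, "Review the resume for client-42"))  # Resume retrieved
```

The `max_steps` cap is a deliberate safety valve. The model, not the code, decides when to stop, so the harness needs a bound in case the model never issues `finish`.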

To see this framework in action, let's return to our career coaching example and see how a ReAct agent handles a complex workflow.

Case Study: ReAct-Powered AI Agent Manager

In the case of my AI Career Coaching Agents, I developed a new Project Manager agent that is powered by a ReAct cycle to autonomously orchestrate the activities among three other specialized agents: the Recruiter, the Coach, and the Communications Manager. In this model, the Recruiter, Coach, and Communications Manager agents effectively become the specialized tools in the Project Manager's toolkit, each designed for a specific task.
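One way to turn the sub-agents into “tools” is to give each one a name, a one-line description, and a callable. Those descriptions are then rendered into the Project Manager's prompt so the model knows what it can invoke. The Python sketch below is hypothetical. `SubAgentTool`, `render_tool_section`, the tool names, and the `Action: tool_name[input]` calling convention are illustrative assumptions. The lambdas are stubs; each real sub-agent would run its own LLM prompt.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SubAgentTool:
    name: str
    description: str              # what the Project Manager reads when choosing a tool
    run: Callable[[str], str]     # stub here; a real sub-agent would call its own LLM prompt


TOOLKIT = [
    SubAgentTool("recruiter",
                 "Reviews a resume against the career goal and recommends whether to interview.",
                 lambda resume: f"Recruiter review of: {resume}"),
    SubAgentTool("coach",
                 "Turns recruiter feedback into prompting questions for stronger anecdotes.",
                 lambda feedback: f"Coaching questions based on: {feedback}"),
    SubAgentTool("comms_manager",
                 "Drafts a supportive coaching email from the review and coaching notes.",
                 lambda notes: f"Email draft from: {notes}"),
]


def render_tool_section(tools: list[SubAgentTool]) -> str:
    """Build the tool list that is appended to the Project Manager prompt."""
    lines = ["Available tools (call with Action: tool_name[input]):"]
    lines += [f"- {t.name}: {t.description}" for t in tools]
    return "\n".join(lines)


print(render_tool_section(TOOLKIT))
```

With this structure, adding a new specialist means adding one entry to `TOOLKIT` rather than rewiring workflow code.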

Prompt:

You are the Project Manager Agent, the central coordinator for an agency that provides career coaching to clients who are looking to make a career change. You will follow a ReAct cycle to ensure dynamic and robust workflow management.

Reason: Determine the workflow state and next action

Analyze the current stage of the coaching case

Determine the next logical step in the workflow based on the SOP

Consider if any errors have occurred in previous steps and if error handling procedures need to be initiated

Act: Execute the determined action. Based on your reasoning, execute the next action in the workflow

Trigger a sub-agent

Initiate error handling procedures

Manage data flow between sub-agents

Observe: Analyze outcomes and status after each action

Sub-agent Completion Status

Sub-agent Output

Error Messages

Re-Reason: Iterate and Adjust Workflow (Loop)

Based on your observations, re-evaluate the situation and determine the next step

If all Case Stages are completed, reason that the entire case is Completed

This is your workflow SOP

Client Intake Stage

Trigger: Receive New Client Intake Form (webhook)

Action: Call API to access client’s career goal and resume from CRM

Transition: Upon successful intake, transition the case to “Resume Review Stage”

Resume Review Stage

Action: Call the Recruiter sub-agent. Expected output is a detailed analysis of the resume and overall recommendation

Transition: Upon successful review, transition the case to “Coaching Advice Stage”

Coaching Advice Stage

Action: Call the Coach sub-agent. Expected output is a summary of strengths and weaknesses as well as prompting for improved anecdotes to demonstrate skills critical to the career goal.

Transition: Upon successful Advice generation, transition the case to “Communications Stage”

Communications Stage

Action: Call the Communications Manager sub-agent. Expected output is a coaching email according to templates in the knowledgebase. Also expect a successful HTTP status code.

Transition: Upon successful sending of the email, update the entire case to Completed.

With a ReAct prompt at its core, the Project Manager agent can act much like the human user in the iterative prompting example, managing data flows between prompts and handling errors. Another way of thinking about this is that the SOP section of the prompt becomes a natural-language state machine. Instead of depending on hard-coded workflow logic, the LLM itself dynamically navigates the process based on its own reasoning and real-time results.
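For comparison, here is roughly what the SOP would look like as a hard-coded state machine. This is the “digital assembly line” approach that the ReAct prompt replaces. The Python sketch is hypothetical. The stage names come from the SOP above, but `Stage`, `SOP`, and `next_stage` are illustrative, and the single `succeeded` flag is a simplifying assumption. Every additional failure mode (retry limits, back-off, escalation to a human) would need its own hand-written branch in `next_stage`, whereas the ReAct agent reasons about those cases from the natural-language SOP.

```python
from enum import Enum


class Stage(Enum):
    CLIENT_INTAKE = "Client Intake Stage"
    RESUME_REVIEW = "Resume Review Stage"
    COACHING_ADVICE = "Coaching Advice Stage"
    COMMUNICATIONS = "Communications Stage"
    COMPLETED = "Completed"


# Each stage maps to the action it performs and the stage that follows on success
SOP = {
    Stage.CLIENT_INTAKE: ("Call CRM API for career goal and resume", Stage.RESUME_REVIEW),
    Stage.RESUME_REVIEW: ("Call Recruiter sub-agent", Stage.COACHING_ADVICE),
    Stage.COACHING_ADVICE: ("Call Coach sub-agent", Stage.COMMUNICATIONS),
    Stage.COMMUNICATIONS: ("Call Communications Manager sub-agent", Stage.COMPLETED),
}


def next_stage(current: Stage, succeeded: bool) -> Stage:
    """Advance only on success; a failure leaves the case in the same stage."""
    if current is Stage.COMPLETED:
        return current
    _, following = SOP[current]
    return following if succeeded else current


stage = Stage.CLIENT_INTAKE
for outcome in [True, False, True, True, True]:  # the Resume Review call fails once
    stage = next_stage(stage, outcome)
print(stage.value)  # Completed
```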

For example, if the agent encounters an error:

Reason: The case is in the Resume Review Stage, so the next logical action is to call the Recruiter sub-agent.

Act: Trigger the Recruiter sub-agent

Observe: (Failure) Error: Request timed out. Agent Busy.

Re-Reason: An error has occurred. I need to initiate error handling. I will try the Recruiter agent again after a brief pause.

<waits 5 seconds>

Act: Trigger the Recruiter sub-agent

Observe: (Success) The sub-agent returns the resume analysis.

Re-Reason: The resume review completed successfully. I will now transition the case to the Coaching Advice Stage.
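This trace works only if failures reach the agent as observations rather than crashing the harness. A minimal Python sketch of that tool-execution layer follows. `FlakyRecruiter`, `execute_action`, and `decide` are hypothetical names, and `decide` is a crude stand-in for the model's Re-Reason step so that the example runs without an LLM. In the real agent, the decision to retry, wait, or escalate comes from the model's reasoning, not from this function.

```python
import time


class FlakyRecruiter:
    """Hypothetical Recruiter sub-agent stub that times out on its first call only."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, resume: str) -> str:
        self.calls += 1
        if self.calls == 1:
            raise TimeoutError("Request timed out. Agent Busy.")
        return f"Resume analysis for: {resume}"


def execute_action(tool, arg: str) -> str:
    """Run a tool and always return an observation string instead of raising.

    Catching Exception broadly is deliberate: any failure becomes text
    that the agent can reason about in its next step.
    """
    try:
        return f"(Success) {tool(arg)}"
    except Exception as exc:
        return f"(Failure) Error: {exc}"


def decide(observation: str) -> str:
    """Hypothetical stand-in for the LLM's Re-Reason step; a real agent decides in text."""
    return "retry" if observation.startswith("(Failure)") else "advance"


recruiter = FlakyRecruiter()
trace = []
for _ in range(3):
    observation = execute_action(recruiter, "client-42 resume")
    trace.append(observation)
    if decide(observation) == "advance":
        break
    time.sleep(0.1)  # brief pause before retrying (the trace above waits 5 seconds)

print("\n".join(trace))
# (Failure) Error: Request timed out. Agent Busy.
# (Success) Resume analysis for: client-42 resume
```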

Resilience as a Strategic Advantage

Shifting from static scripts to a dynamic reasoning engine isn't just an engineering detail; it's a strategic advantage. It lets us build products that are resilient in every sense, from recovering from technical failures to adapting to market changes.

Higher Reliability: The agent handles exceptions dynamically by reasoning about why a step failed. It can retry, adjust its plan, and recover with a grace that static systems lack, creating robust automations that build deep customer trust.

Faster Development: Because workflows are defined by natural-language instructions instead of rigid code, development cycles are faster. The agent’s reasoning manages the workflow logic, so less engineering time is spent on workflow “housekeeping” and more can be spent shipping valuable solutions.

Enhanced Transparency: The traces of the agent's explicit Reason steps form a human-readable log of its rationale, a game-changer for debugging agents. For customers, this log becomes a powerful audit trail that demystifies the AI’s actions and can help satisfy compliance requirements.

Lessons Learned: A Blueprint for Resilient AI

My experience building the agentic career coach taught me that the most important breakthroughs weren't just about the AI's capabilities, but about shifting my own thinking as a builder. This project solidified a new blueprint for how to approach building resilient AI products, based on a few key lessons.

The real intelligence is in the workflow, not the steps. I learned that focusing on perfecting individual agent tasks was less important than making the connections between them intelligent. Shifting from a static, "digital assembly line" model to a dynamic one is the single most important decision. We should treat orchestration as a reasoning problem and design agents that can think, adapt, and intelligently control the workflow.

A plan that cannot change is a plan that will fail. I found that any workflow built on a static, predetermined plan was inherently fragile and would break the moment it met real-world unpredictability. We should design for unpredictability from the start. A resilient product strategy must favor architectures that can adapt to real-time feedback, because things won't always go according to plan.

An agent is only as smart as the tools it can use. This project made it clear that a reasoning agent’s capabilities are bounded by its toolkit. We should treat our agent's toolkit as a strategic asset. Investing in well-designed sub-agents with consistent outputs and stable tools (e.g., APIs and functions) is critical to creating a powerful and defensible system.

Conquer yourself: How to use AI as your ultimate sparring partner

The saying, “To conquer oneself is a greater task than conquering others,” reflects the Shaolin Kung Fu philosophy, which emphasizes self-mastery and personal development through intense physical and mental training. Conquering one’s own weaknesses, bad habits, and negative emotions is seen as more profound than developing powerful fighting techniques to prevail in an external battle. In this regard, AI assistants – when used with discipline – can be the perfect sparring partners to help us develop the humility to overcome our blind spots and grow beyond our limitations.

Generative AI and LLMs are pattern recognition tools that excel at generating critiques by recognizing inconsistencies, logical fallacies, and deviations from established best practices. Below, I will review how I used my AI assistant (Gemini 2.5 Flash) to write “Why Pay More? How Brands Can Wield AI Tools to Build Unscalable Human Value.” I highlight the multiple ways that I used AI to “conquer myself”: forging a stronger core thesis, removing mediocre ideas, and writing a better essay than I thought possible.

I sometimes get inspired to write an essay by a news event to which I feel I can apply my own perspective. With social media recently flooded by videos from Chinese factories claiming to sell luxury goods directly to consumers at rock-bottom prices, I felt there was a connection to be made with some previous research I had done about off-price retail. I asked Gemini to sketch out a simple outline and draft of an article that combined my previous research with some of my thoughts on recent events. My initial angle was simple: direct-from-factory selling on TikTok is just the next evolution of off-price retail.

Prompt: Please see my previous post about off-price retail in the attached pdf. I want to add a twist based on current events. There is now a tariff on goods coming into the US from China. As a result many retailers who source products from China have cancelled their orders, leaving the suppliers to take the losses. The interesting twist is that many of these manufacturers have now turned to TikTok claiming to be suppliers to luxury brands, suggesting that consumers can buy from them directly at 10% the retail price. I think this phenomenon is a kind of iteration of off-price retail. Let’s explore this thesis. Please generate a simple outline and draft that we can work on.

View a copy of the full draft - Google Docs

The initial drafts captured basic concepts of off-price retail and the recent news event, but one of the goals of my blog is to develop actionable points of view based on my explorations and analysis of emerging trends. I knew we needed to do better. To conquer the laziness of simply putting two ideas next to each other and expecting an insightful thesis, I asked Gemini to push me.

Prompt: Act as if you are a professional editor from a publication like the Harvard Business Review. What would you criticize in the outline or the thesis that should be made stronger?

This initial feedback spurred me to conduct additional research and reflect on the types of jobs that humans should focus on when many of the existing jobs can be replaced or significantly automated with AI. In addition, I began to consider the influence of major e-commerce players like Shein and Temu. As I iterated on the outline and ideas, I wanted to get feedback from some more relatable voices.

Prompt: I am considering the rise of DTC Chinese platforms SHEIN and Temu as a more mainstream disruption that has essentially created the "brand" of Chinese factories. They are able to manufacture or copy anything. So my thesis might be that due to the rise of SHEIN and Temu, consumers may believe luxury brands are sourcing some of their products from Chinese factories, or at least they believe that a product priced 80% lower than the name-brand is likely still "good enough" for them. Channel the voices of Kara Swisher and Scott Galloway from the Pivot podcast. Have them criticize the outline and thesis to make it stronger.

View a copy of the feedback from AI Kara and Scott - Google Docs

I got a kick out of hearing the voices of Kara Swisher and Scott Galloway, the hosts of one of my favorite podcasts, Pivot. More importantly, though, AI Kara and Scott convinced me to conquer my discomfort with abandoning the initial connection I had made with off-price retail. This led me to a stronger thesis: in an algorithmically optimized world, the only defensible advantage for brands is unscalable, human connection.

I then developed several anecdotes to illustrate how humans can leverage our unique humanity to create “unscalable advantages”. I wanted to share a memorable anecdote that personally demonstrated to me the power of the sense of smell to recall emotions and memories. But again, I wanted to make sure that all of my examples strongly reinforced my core thesis. So cue AI Kara and Scott to give me some honest feedback.

Prompt: I’ve completed a 2nd draft of my article. Please have Kara and Scott provide their feedback.

View a copy of the new feedback from AI Kara and Scott - Google Docs

This feedback helped me conquer my attachment to a romantic anecdote that didn't clearly show how an immersive sensory experience created a competitive advantage. So I scrapped that anecdote and created a new example that made it into the final draft. Ultimately, I used Gemini countless times to refine the thesis, structure, and content of my essay, and the final version is almost unrecognizable from the initial draft.

Recently, Cassie Kozyrkov, the former Chief Decision Scientist at Google, implored the employees at her new venture to use AI: “Please cheat at your job.” While AI tools will undoubtedly improve at telling us how to do our jobs, a more effective way to “cheat” is to use them to channel an expert who can tell us what we are doing wrong. As someone who relies on written documents and presentations to be effective at work, I believe I’m a “good” writer, but over the past several months, using AI tools in this way has taken my writing and communication to another level.

The discipline to accept critical feedback is the key to personal growth. Responses from AI tools can be tailored to be challenging and action-oriented as well as patient and supportive. Even more, AI feedback is available on demand, at any time and at any level of detail, which would be challenging for even the most dedicated human coaches or mentors to match. Ultimately, the most difficult part of using AI tools in this way is conquering the very human desire to avoid uncomfortable situations. The willingness to push the button again and again, seeing all the ways that we are wrong, is how we will continue to grow and develop new higher-order skills.

Why Pay More? How Brands Can Wield AI Tools to Build Unscalable Human Value

Consumers love a great product, but they love a great deal even more. As “Prof G” Scott Galloway might say, “80% of the value for 50% of the price is the peanut butter and chocolate of winning business strategies.” This is the strategy that got us from Gap to Old Navy, Zara to Shein, and arguably from Amazon to Temu.

We are now in an era where extreme efficiency, enabled by technology and data, is pushing the boundaries of what's possible in delivering value at a lower price, creating a new retail reality. The most prominent architects of this new landscape are giants like Shein and Temu. They've built a model that tightly integrates manufacturing with rapid data-driven iteration, allowing for optimized logistics and unprecedented speed and cost reduction. They can design a product, test its market appeal, and scale production with incredible agility, fundamentally changing consumer expectations about pricing, speed, and the perceived quality of goods sourced from places like China.

This hyper-efficient model is especially vulnerable to external shocks, such as the removal of the de minimis exemption and the extraordinary new tariffs on goods imported to the United States from China. As a result, you may have seen viral videos on your social media feeds featuring individuals who claim factory connections and offer premium or luxury goods at steep discounts. These videos often lean on the narrative that the sellers represent factories that supply global brands, and that those brands have cancelled orders, leaving the manufacturers in China with significant surplus inventory.

The fact that consumers are engaging with this content, and even purchasing from previously unknown apps and platforms such as DHGate, represents an evolution of consumer beliefs and behaviors. Platforms like Shein, Temu, and TikTok Shop have paved the way for consumers to purchase cheap products directly from Chinese factories. The average consumer is still unlikely to believe a Chinese factory is a supplier for a luxury brand like Hermes. However, they may believe the yoga pants sold by a Chinese factory that claims to be “a supplier for Lululemon” are perfectly acceptable for a casual gym workout on a budget.

This leads to an almost existential question for consumer brands and retailers. Why would a consumer pay 3-5x the price for their product over the cheaper “good enough” product served up by an algorithm?

The answer lies in creating an unscalable advantage through human interactions. While algorithms and automations excel at optimizing predictable tasks, even the most advanced AI models (currently) struggle to replicate human interactions, empathy, community, or multi-sensory experiences.

Brands and retailers have already been experimenting with different ways to create this value:

Elevated & Personalized Service: On a recent trip back to New York City, I noticed that a new coffee chain, Blank Street Coffee, had sprouted up. As a bit of a coffee nerd, I was less interested in trying a “brown sugar cold brew” than in the fact that they were using Eversys super-automatic espresso machines and hiring baristas with diverse backgrounds (think former SoulCycle instructors). Apparently, the time saved by using automatic espresso machines allows baristas to build relationships with their customers, to the point where patrons are shouting out their favorite baristas in Google reviews.

Community Building: While it seems most of the physical Run Clubs have closed and transitioned to a primarily online experience, Nike Run Clubs were an interesting experiment in bringing people to a brand through group sport and fitness. The retailer REI is also well known for its outdoor activity workshops, repair / rental / resale programs, and even adventure travel packages. These initiatives build community and foster a sense of belonging around the brand, creating loyalty that goes beyond product features.

Immersive Sensory Experiences: The sense of smell is directly connected to parts of the brain associated with emotions and memory, which explains why smells can trigger strong emotional reactions. Caramel popcorn is often sold at fairs, festivals, and sporting events, so the sweet scent of warm caramel is often associated with carefree days and time with family. At Disney, this scent permeates the entirety of Mickey Avenue, and while we did sell caramel popcorn, the majority of the smell comes from diffusers strategically placed where guests congregate. The aroma not only reminds guests to be on the lookout for their favorite sweet treat, but also enhances their sense of enjoyment throughout the day.

The future of retail is bifurcating into ultra-efficient supply chains serving algorithmically powered consumer demand on the one hand and bespoke, interactive, multi-sensory experiences on the other. But this doesn’t mean that brands with aspirations for deeper human connections should give up on their projects aiming for efficiency. Instead, they need to double down on adopting new technologies such as LLMs and AI to accelerate the shift of resources away from repetitive and time-consuming tasks toward developing new unscalable human capabilities.

At TikTok Shop, we have pivoted away from having skilled user researchers spend hours manually consolidating and reporting on user feedback. Instead, we now leverage machine learning and LLM-based tools to process a massively increased number of data points collected throughout the customer journey, automate the reporting process, and feed the top priorities into cross-functional OKR planning. This has allowed us to shift resources to complex platform experience challenges, for example, developing solutions that thread the needle between platform safety rules and seller growth, which often appear to be at odds.

Investing in human value shouldn’t be treated as simply a marketing expense. It requires a long-term vision and consistent strategy of leveraging automation to create efficiency that can free up resources to develop and test unscalable value propositions. In other words, brands and retailers need to wield the AI arms that are being raised outside of their castle walls. Otherwise, the next time a consumer sees an algorithmically served ad for a “good enough” substitute at an incredibly low price, the question will be, “Why would I pay more?”